Built-in Jobs


Latest revision as of 23:22, 26 November 2025

Obsidian comes bundled with free jobs for common tasks. These are provided in addition to Scripting Jobs to reduce implementation and testing time for common job functions.

As of Obsidian 2.7.0, we have open-sourced our built-in convenience jobs under the MIT License. You can find the source in obsidian-builtin-job-src.jar in the root of the installation folder.

File Processing Jobs

These jobs provide common basic file operations, such as file cleanup (deletion) and archiving, reducing the amount of code your organization needs to write.

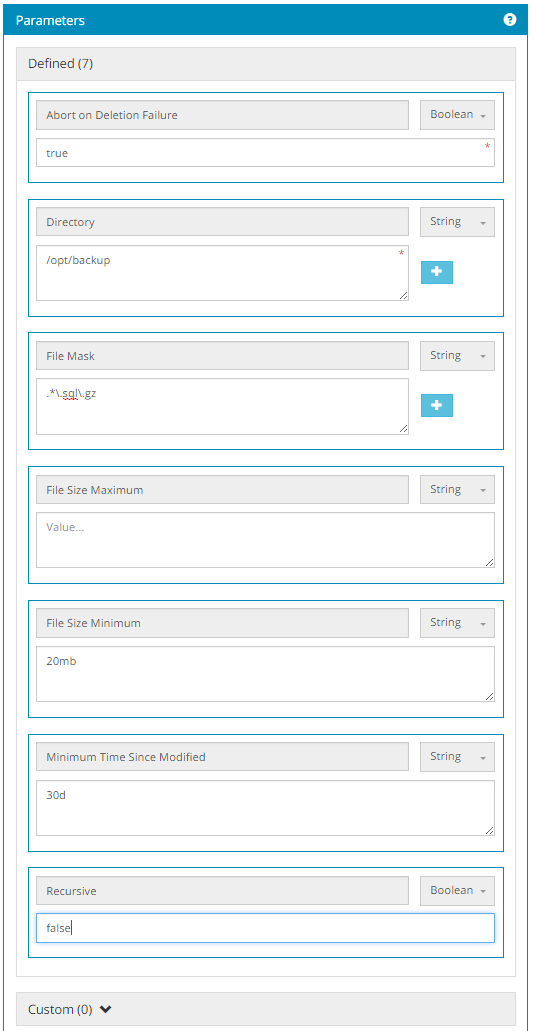

File Cleanup Job

Job Class: com.carfey.ops.job.maint.FileCleanupJob

Introduced in version 2.0

This job deletes files in specified paths based on one or more file masks (using regular expressions).

It supports the following configuration options:

- Directory (1 or more Strings) - A directory to scan for files. If recursive processing is enabled, child directories are also scanned.

- File Mask (0 or more Strings) - A regular expression, based on java.util.regex.Pattern, that files must match to be deleted. To match all files, remove all configured values or use ".*" without quotes. If multiple values are used, files are eligible for deletion if they match any of the file masks.

- Abort on Deletion Failure (Boolean) - If deletion fails, should we fail the job?

- File Size Minimum (String) - The minimum size a file must have to be deleted. Sizes can be specified in bytes, kilobytes, megabytes or gigabytes (e.g. 10b, 100kb, 20mb, 2gb).

- File Size Maximum (String) - The maximum size a file can have to be deleted. Sizes can be specified in bytes, kilobytes, megabytes or gigabytes (e.g. 10b, 100kb, 20mb, 2gb).

- Minimum Time Since Modified (String) - The minimum age of a file, based on its last modified time, for it to be deleted. Ages can be specified in seconds, minutes, hours and days (e.g. 10s, 5m, 24h, 2d).

- Recursive (Boolean) - Whether files in subdirectories of the configured directories should be checked for matching files.
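The size and age options above take short unit-suffixed strings. The following Java sketch shows one way such values could be interpreted. It is not taken from obsidian-builtin-job-src.jar, and the class FileFilterValues and its methods are hypothetical. It assumes kb, mb and gb are 1024-based multiples and that units are case-insensitive; check the bundled source for the exact rules.

```java
import java.time.Duration;
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Hypothetical helper (not part of Obsidian) interpreting values such as
 * "20mb" or "24h". Assumption: kb/mb/gb are 1024-based multiples.
 */
final class FileFilterValues {
    private static final Pattern SIZE = Pattern.compile("(\\d+)\\s*(b|kb|mb|gb)");
    private static final Pattern AGE = Pattern.compile("(\\d+)\\s*(s|m|h|d)");

    private FileFilterValues() {}

    /** Parses "10b", "100kb", "20mb" or "2gb" into a byte count. */
    static long parseSize(String value) {
        Matcher m = SIZE.matcher(value.trim().toLowerCase(Locale.ROOT));
        if (!m.matches()) {
            throw new IllegalArgumentException("Unrecognized size: " + value);
        }
        long amount = Long.parseLong(m.group(1));
        switch (m.group(2)) {
            case "b":  return amount;
            case "kb": return amount * 1024L;
            case "mb": return amount * 1024L * 1024L;
            default:   return amount * 1024L * 1024L * 1024L; // "gb"
        }
    }

    /** Parses "10s", "5m", "24h" or "2d" into a Duration. */
    static Duration parseAge(String value) {
        Matcher m = AGE.matcher(value.trim().toLowerCase(Locale.ROOT));
        if (!m.matches()) {
            throw new IllegalArgumentException("Unrecognized age: " + value);
        }
        long amount = Long.parseLong(m.group(1));
        switch (m.group(2)) {
            case "s": return Duration.ofSeconds(amount);
            case "m": return Duration.ofMinutes(amount);
            case "h": return Duration.ofHours(amount);
            default:  return Duration.ofDays(amount); // "d"
        }
    }

    public static void main(String[] args) {
        System.out.println(parseSize("20mb")); // 20971520
        System.out.println(parseAge("24h"));   // PT24H
    }
}
```

Rejecting unrecognized values with an exception, rather than silently ignoring them, keeps a misconfigured filter from matching more files than intended.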

Customization

If you wish to tweak or customize the behaviour of this job, it can be subclassed. The following methods may be overridden or extended by calling super methods:

- onStart - Called on job startup.

- onEnd - Called on job end, regardless of success or failure.

- shouldDelete - Determines if a file should be deleted.

- deleteMatchingFile - Deletes a matching file and saves a job result for its path.

- matchesAge - Determines if a file matches any age conditions (i.e. Minimum Time Since Modified), or true if none are configured.

- matchesMask - Determines if a file matches any configured file masks, or true if none are configured.

- matchesSize - Determines if a file matches size conditions, or true if none are configured.

- processDirectory - Enumerates files in configured directories and calls shouldDelete and deleteMatchingFile as necessary.
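The matchesMask, matchesSize and matchesAge descriptions imply that a file is deleted only when every configured check passes, and that an unconfigured check always passes. Below is a minimal standalone sketch of that decision. It assumes full-name regex matching (Matcher.matches()) and inclusive size bounds. CleanupCriteria is a hypothetical class, not the FileCleanupJob implementation. A real customization would subclass the job and override its own methods instead.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.Collections;
import java.util.List;
import java.util.regex.Pattern;

/** Hypothetical sketch of the combined deletion checks; not Obsidian's code. */
final class CleanupCriteria {
    private final List<Pattern> masks; // empty = no mask configured
    private final Long minSize;        // null = not configured
    private final Long maxSize;        // null = not configured
    private final Duration minAge;     // null = not configured

    CleanupCriteria(List<Pattern> masks, Long minSize, Long maxSize, Duration minAge) {
        this.masks = masks;
        this.minSize = minSize;
        this.maxSize = maxSize;
        this.minAge = minAge;
    }

    /** True if any mask matches the whole file name, or no masks are configured. */
    boolean matchesMask(String fileName) {
        return masks.isEmpty() || masks.stream().anyMatch(p -> p.matcher(fileName).matches());
    }

    /** True if the size is within the configured (inclusive) bounds. */
    boolean matchesSize(long sizeBytes) {
        return (minSize == null || sizeBytes >= minSize)
                && (maxSize == null || sizeBytes <= maxSize);
    }

    /** True if the file was last modified at least minAge before "now". */
    boolean matchesAge(Instant lastModified, Instant now) {
        return minAge == null || !lastModified.plus(minAge).isAfter(now);
    }

    /** A file is deleted only if every check passes. */
    boolean shouldDelete(String fileName, long sizeBytes, Instant lastModified, Instant now) {
        return matchesMask(fileName) && matchesSize(sizeBytes) && matchesAge(lastModified, now);
    }

    public static void main(String[] args) {
        CleanupCriteria logsOlderThanTwoDays = new CleanupCriteria(
                Collections.singletonList(Pattern.compile(".*\\.log")), null, null, Duration.ofDays(2));
        Instant now = Instant.now();
        // A 3-day-old log file passes every check
        System.out.println(logsOlderThanTwoDays.shouldDelete("app.log", 1024, now.minus(Duration.ofDays(3)), now)); // true
    }
}
```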

Sample Configuration

File Archive Job

Job Class: com.carfey.ops.job.maint.FileArchiveJob

Introduced in version 2.0

This job archives files passed in via a chained job's saved results. Therefore, it is intended to be used as a chained job only. One or more accessible absolute file paths can be passed in via job results called 'file'. Each one will be processed for archiving. For example, if you have a job that generates two files called 'customers.txt' and 'orders.txt', you will use Context.saveJobResult(String, Object) to save a value for each absolute file path (e.g. 'C:/customers.txt' and 'C:/orders.txt').

It supports the following configuration options:

- Archive Directory (1 or more Strings) - A directory where an archive copy is placed.

- Rename Pattern (String) - How should we name the archive file? Rename patterns support the following tokens delimited by < and >:

  - <filename> is the full original file name (e.g. 'abc.v1.txt')
  - <basename> is the file name with the last extension stripped (e.g. 'abc.v1')
  - <ext> is the last file extension (e.g. 'txt')
  - <ts:dateformat> corresponds to a timestamp formatted in the supplied SimpleDateFormat pattern (e.g. <ts:yyyy-MM-dd-HH-mm:ss>)
  - other literals outside of < or >, or inside of < and > but not matching any preceding tokens.

  For example, to archive a GZIP version of a file with a timestamp and the extension 'gz', you can use the rename pattern <basename>.<ts:yyyy-MM-dd-HH-mm-ss>.<ext>.gz. To keep the original name, simply use the pattern <filename>.

- Delete Original File (Boolean) - Should we remove the original file after archiving?

- GZIP (Boolean) - Should the target archive file be compressed using GZIP?

- Overwrite Target File (Boolean) - If the archive file to be created already exists, should we overwrite it, or fail instead?

- File Size Minimum (String) - The minimum size a file must have to be processed. Sizes can be specified in bytes, kilobytes, megabytes or gigabytes (e.g. 10b, 100kb, 20mb, 2gb). Available as of Obsidian 6.0.1.

- File Size Maximum (String) - The maximum size a file can have to be processed. Sizes can be specified in bytes, kilobytes, megabytes or gigabytes (e.g. 10b, 100kb, 20mb, 2gb). Available as of Obsidian 6.0.1.

- Minimum Time Since Modified (String) - The minimum age of a file, based on its last modified time, for it to be processed. Ages can be specified in seconds, minutes, hours and days (e.g. 10s, 5m, 24h, 2d). Available as of Obsidian 6.0.1.
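To make the Rename Pattern tokens concrete, here is a minimal Java sketch of the substitution. It is not the FileArchiveJob source, and the class RenamePattern is hypothetical. It makes three assumptions. A name without a dot has an empty <ext> and a <basename> equal to the full name. An unrecognized <...> token is copied through unchanged, brackets included. The timestamp uses the JVM's default time zone.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical sketch of rename-pattern token substitution; not Obsidian's code. */
final class RenamePattern {
    private static final Pattern TOKEN = Pattern.compile("<([^<>]*)>");

    private RenamePattern() {}

    static String apply(String pattern, String fileName, Date timestamp) {
        int dot = fileName.lastIndexOf('.');
        String basename = dot >= 0 ? fileName.substring(0, dot) : fileName;
        String ext = dot >= 0 ? fileName.substring(dot + 1) : "";

        Matcher m = TOKEN.matcher(pattern);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String token = m.group(1);
            String replacement;
            if (token.equals("filename")) {
                replacement = fileName;
            } else if (token.equals("basename")) {
                replacement = basename;
            } else if (token.equals("ext")) {
                replacement = ext;
            } else if (token.startsWith("ts:")) {
                // Everything after "ts:" is treated as a SimpleDateFormat pattern
                replacement = new SimpleDateFormat(token.substring(3)).format(timestamp);
            } else {
                replacement = m.group(); // unknown token: keep "<...>" as a literal
            }
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        // Prints something like customers.<current timestamp>.txt.gz
        System.out.println(apply("<basename>.<ts:yyyy-MM-dd-HH-mm-ss>.<ext>.gz", "customers.txt", new Date()));
    }
}
```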

Customization

If you wish to tweak or customize the behaviour of this job, it can be subclassed. The following methods may be overridden or extended by calling super methods:

- onStart - Called on job startup.

- onEnd - Called on job end, regardless of success or failure.

- determineArchiveFilename - Determines the name of the archive file based on the configured "Rename Pattern".

- processFile - For each input file, determines the archive file name and copies the original file to the archive.

- processDelete - If the original file is to be deleted, this method handles deleting it. If deletion fails, the job fails; to change this behaviour, override this method.
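The following Java sketch illustrates what processFile and processDelete do with the GZIP, Overwrite Target File and Delete Original File options. It copies a file to an archive path, optionally compresses it, and optionally removes the original. It is not the FileArchiveJob source. ArchiveStep and its parameters are hypothetical, and the target path is assumed to have already been derived from the Rename Pattern.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.FileAlreadyExistsException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.zip.GZIPOutputStream;

/** Hypothetical sketch of a single archive step; not Obsidian's code. */
final class ArchiveStep {
    private ArchiveStep() {}

    static Path archive(Path source, Path target, boolean gzip, boolean overwrite,
                        boolean deleteOriginal) throws IOException {
        if (!overwrite && Files.exists(target)) {
            // Mirrors "Overwrite Target File = false": fail rather than replace
            throw new FileAlreadyExistsException(target.toString());
        }
        if (gzip) {
            // newOutputStream creates or truncates the target by default
            try (OutputStream out = new GZIPOutputStream(Files.newOutputStream(target))) {
                Files.copy(source, out);
            }
        } else {
            Files.copy(source, target, StandardCopyOption.REPLACE_EXISTING);
        }
        if (deleteOriginal) {
            // Like processDelete: a failed delete throws, which would fail the job
            Files.delete(source);
        }
        return target;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("archive-demo");
        Path source = Files.write(dir.resolve("customers.txt"), "id,name\n".getBytes(StandardCharsets.UTF_8));
        Path archived = archive(source, dir.resolve("customers.txt.gz"), true, false, true);
        System.out.println(archived + " exists: " + Files.exists(archived));
    }
}
```

The existence check followed by a write is not atomic. That is acceptable for a sketch, but concurrent writers to the same archive directory would need stronger guarantees.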

Sample Configuration

File Scanner Job

Job Class: com.carfey.ops.job.maint.FileScannerJob

Introduced in version 2.2

This job locates files in a directory whose names match one or more file masks (using Java regular expressions) and saves the absolute path (a String) of each match as a job result under the name file.

This job is intended to be conditionally chained to a job that knows how to process the results.

Go File Pattern may be used to ensure that files in the configured Directory are only picked up and saved if an expected token file exists. If Delete Go File is set to true, any found token files are deleted. If this deletion fails, the job aborts and no file results are saved. Successfully deleted go files are stored as job results under the name deletedGoFile.

The job supports the following configuration options:

- Directory (String) - A directory to scan for files.

- File Pattern (1 or more Strings) - One or more file patterns (using java.util.regex.Pattern) that a file name must match to be considered a match. If any one of the configured patterns matches, the file is considered a match.

- Go File Pattern (String) - A file pattern (using java.util.regex.Pattern) that must match at least one file in the Go File Directory (or the main Directory if not supplied) in order for files to be processed and saved as job results.

- Go File Directory (optional String) - The directory where we look for files matching the Go File Pattern. If missing, the value for Directory is used.

- Delete Go File (Boolean) - If we find one or more go files, should we delete them? If deletion fails, the job will fail.

- File Size Minimum (String) - The minimum size a file must have to be considered a match. Sizes can be specified in bytes, kilobytes, megabytes or gigabytes (e.g. 10b, 100kb, 20mb, 2gb). Available as of Obsidian 6.0.1.

- File Size Maximum (String) - The maximum size a file can have to be considered a match. Sizes can be specified in bytes, kilobytes, megabytes or gigabytes (e.g. 10b, 100kb, 20mb, 2gb). Available as of Obsidian 6.0.1.

- Minimum Time Since Modified (String) - The minimum age of a file, based on its last modified time, for it to be considered a match. Ages can be specified in seconds, minutes, hours and days (e.g. 10s, 5m, 24h, 2d). Available as of Obsidian 6.0.1.
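The go-file gating described above can be sketched in plain Java. The sketch makes several assumptions. Nothing is reported unless a go file exists. Go files are deleted before any results are produced, so a failed delete leaves no results. A null Go File Pattern disables the gate. Size and age filters are omitted for brevity. GoFileScan is a hypothetical class, not the FileScannerJob source. It returns absolute paths as Strings, the same form the job saves under file.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.regex.Pattern;

/** Hypothetical sketch of a go-file-gated directory scan; not Obsidian's code. */
final class GoFileScan {
    private GoFileScan() {}

    static List<String> scan(Path directory, List<Pattern> filePatterns, Pattern goPattern,
                             Path goDirectory, boolean deleteGoFiles) throws IOException {
        if (goPattern != null) {
            Path goDir = (goDirectory != null) ? goDirectory : directory; // falls back to Directory
            List<String> goFiles = matching(goDir, Collections.singletonList(goPattern));
            if (goFiles.isEmpty()) {
                return Collections.emptyList(); // no go file: nothing is picked up
            }
            if (deleteGoFiles) {
                for (String go : goFiles) {
                    Files.delete(Paths.get(go)); // throws on failure, before any results exist
                }
            }
        }
        return matching(directory, filePatterns);
    }

    /** Absolute paths of regular files whose names fully match any pattern, sorted. */
    private static List<String> matching(Path dir, List<Pattern> patterns) throws IOException {
        List<String> result = new ArrayList<>();
        try (DirectoryStream<Path> entries = Files.newDirectoryStream(dir)) {
            for (Path p : entries) {
                String name = p.getFileName().toString();
                if (Files.isRegularFile(p)
                        && patterns.stream().anyMatch(pt -> pt.matcher(name).matches())) {
                    result.add(p.toAbsolutePath().toString());
                }
            }
        }
        Collections.sort(result);
        return result;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Paths.get(args.length > 0 ? args[0] : ".");
        List<String> files = scan(dir, Collections.singletonList(Pattern.compile(".*\\.csv")),
                Pattern.compile(".*\\.go"), null, false);
        files.forEach(System.out::println);
    }
}
```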

Customization

If you wish to tweak or customize the behaviour of this job, it can be subclassed. The following methods may be overridden or extended by calling super methods:

- onStart - Called on job startup.

- onEnd - Called on job end, regardless of success or failure.

- listFiles - Lists files within the directory using the supplied patterns.

- postProcessFiles - This is a hook method called after all job results are saved and go files are deleted if applicable. It is called with all valid files that were found.

- deleteGoFiles - Deletes all found go files, saving a result if successful and throwing a RuntimeException if deletion fails. This can be overridden to customize handling of failures.

Sample Configuration

Maintenance Jobs

These jobs are provided to help maintain the Obsidian installation. Automatic schedule configuration can be disabled via com.carfey.obsidian.standardOutputStreamsEventHook.enabled=true configuration.

Job History Cleanup Job

Job Class: com.carfey.ops.job.maint.JobHistoryCleanupJob

This job will delete job history and related records beyond a configurable age in days. This is useful for keeping the database compact and clearing out old, unneeded data.

The value for maxAgeDays corresponds to the maximum age for records to retain. By default it is set to 365, but you may configure it to any desired value.

As of 3.0.1, the value for maxAgeScheduleDays corresponds to the maximum age for expired job schedules to retain. If this isn't specified, expired job schedules won't be deleted.

As of 3.4.0, the value for maxAgeDays is used to prune expired job schedules and maxAgeScheduleDays is no longer used.

Note: We recommend that high-volume users schedule the job weekly or less frequently, at a non-peak time.

As of Obsidian 5.2.1, this job is configured during new installations to run '@daily' keeping the last 380 days of history.

Log Cleanup Job

Job Class: com.carfey.ops.job.maint.LogCleanupJob

This job will delete log database records beyond a configurable age in days. This is useful for keeping the database compact and clearing out old, unneeded data.

The value for maxAgeDays corresponds to the maximum age for records to retain. By default it is set to 365, but you may configure it to any desired value.

The value for level corresponds to the logging level of events to delete. You may specify multiple values, and the "ALL" option will result in all records matching the age setting being deleted. Valid levels to configure are: FATAL, ERROR, WARNING, INFO, DEBUG, and TRACE.

It is common to only delete lower severity level events, such as INFO, DEBUG, and TRACE, but retain higher severity messages.
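The age and level settings above combine into a single deletion rule. The following sketch is hypothetical and is not the job's query. It assumes that a record is deleted only when it is strictly older than `maxAgeDays` and its level is one of the configured values, and that "ALL" selects every level.

```java
import java.time.Instant;
import java.time.temporal.ChronoUnit;
import java.util.Set;

/** Hypothetical deletion rule for Log Cleanup Job settings; not Obsidian's code. */
final class LogCleanupRule {

    /**
     * @param configuredLevels values of the level setting, e.g. {"DEBUG", "TRACE"} or {"ALL"}
     */
    static boolean shouldDelete(Instant recordTime, String recordLevel, Instant now,
                                int maxAgeDays, Set<String> configuredLevels) {
        Instant cutoff = now.minus(maxAgeDays, ChronoUnit.DAYS);
        boolean levelSelected = configuredLevels.contains("ALL")
                || configuredLevels.contains(recordLevel);
        return recordTime.isBefore(cutoff) && levelSelected;
    }
}
```

For example, with level set to DEBUG and TRACE and maxAgeDays set to 30, a 45-day-old DEBUG record is removed, but an ERROR record of the same age is kept.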

Note: We recommend that high-volume users schedule the job weekly or less frequently, at a non-peak time.

As of Obsidian 5.2.1, this job is configured during new installations as follows:

- 1:00 AM for DEBUG/TRACE keeping the last 30 days of event logs.

- 1:30 AM for INFO keeping the last 60 days of event logs.

- 2:00 AM for WARNING/ERROR keeping the last 120 days of event logs.

- 2:30 AM for FATAL keeping the last 185 days of event logs.

Notification Cleanup Job

Introduced in Obsidian 4.9.0

Job Class: com.carfey.ops.job.maint.NotificationCleanupJob

This job will delete notification database records beyond a configurable age in days. Optionally you may constrain this to specific subscribers. This is useful for keeping the database compact and clearing out old, unneeded data.

The value for maxAgeDays corresponds to the maximum age for records to retain. By default it is set to 365, but you may configure it to any desired value.

The value for subscriber corresponds to the subscriber address(es) whose notifications should be deleted. No validation is performed against this value, so subscribers can change without requiring corresponding job configuration changes.

Note: We recommend that high-volume users schedule the job weekly or less frequently, at a non-peak time.

As of Obsidian 5.2.1, this job is configured during new installations to run at 3:30 AM on the 1st of the month keeping the last 120 days of history.

Disabled Job Cleanup Job

Introduced in Obsidian 3.7.0

Job Class: com.carfey.ops.job.maint.DisabledJobCleanupJob

This job cleans up jobs that have been disabled for the last configured number of days and have no future non-disabled schedule windows.

The value for maxDisabledDays corresponds to how many days in the past a job must have been disabled to be a candidate for being deleted.

Note: We recommend that high-volume users schedule the job weekly or less frequently, at a non-peak time.

As of Obsidian 5.2.1, this job is configured during new installations to run at 3:00 AM on the 1st of the month keeping the last 185 days of history.

Script Job

This job is a convenience job to execute any script, command or other executable file on any platform.

Job Class: com.carfey.ops.job.script.ScriptFileJob

Introduced in Obsidian 2.3.1

This job enables you to specify an existing script, command or other executable to invoke/execute. Validation of the file's existence and executable state is done only at runtime. You must ensure that the file exists or is accessible via environment variables and in an executable state on all Obsidian Hosts in the cluster or restrict the job to the necessary Fixed Hosts. This job uses ProcessBuilder, so refer to it for expected behaviour.

As of Obsidian 3.8.0, this job supports best-effort interruption as an Interruptable job. It is best effort in that it only destroys the underlying process that invoked the script, but cannot ensure that anything the script has invoked has terminated.

- Script with Arguments (1 or more Strings) - The first value is a path to the execution script (or other executable), which is typically fully qualified, or relative to the working directory. The additional values are any required arguments for the command. On *nix, if you are invoking a script, you may need to prefix the command with "./", e.g. ./script.sh. Corresponds to the ProcessBuilder(java.lang.String...) constructor.

- Copy Obsidian Process' Environment (Boolean) - Should the runtime environment be copied from the environment of the running Obsidian process? Defaults to true. If set to false, the environment is cleared. See ProcessBuilder.environment().

- Success Exit Code (Integer) - The exit code that designates success. Any exit code not matching this value will result in a job failure. Defaults to 0.

- Working Directory (String) - The desired working directory for the script. If no special working directory is required, set to the directory containing the target script. See ProcessBuilder.directory().

- Environment Parameter Key Value Pairs (0 or more pairs of Strings) - Key/Value pairs to be set on the execution environment. These values are set just before execution and will override any existing values copied from the Obsidian Process if Copy Obsidian Process' Environment was set to true. See ProcessBuilder.environment().
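Since the options above map onto `java.lang.ProcessBuilder`, the following sketch shows roughly how they combine. It is an approximation under stated assumptions, not the job's source. `ScriptJobSketch` is a hypothetical name. The sketch simply inherits standard I/O, because this page does not describe how the job handles script output. The `destroy()` call mirrors the best-effort interruption described above: it stops the direct child process only.

```java
import java.io.File;
import java.io.IOException;
import java.util.List;
import java.util.Map;

/** Hypothetical mapping of Script Job settings onto java.lang.ProcessBuilder. */
final class ScriptJobSketch {

    static ProcessBuilder build(List<String> scriptWithArgs, boolean copyEnvironment,
                                String workingDirectory, Map<String, String> envPairs) {
        // Script with Arguments: first value is the executable, the rest are its arguments
        ProcessBuilder pb = new ProcessBuilder(scriptWithArgs.toArray(new String[0]));
        if (!copyEnvironment) {
            pb.environment().clear(); // Copy Obsidian Process' Environment = false
        }
        pb.environment().putAll(envPairs); // overrides any copied values
        if (workingDirectory != null) {
            pb.directory(new File(workingDirectory)); // Working Directory
        }
        return pb;
    }

    /** Starts the process and fails unless it exits with the configured success code. */
    static void run(ProcessBuilder pb, int successExitCode)
            throws IOException, InterruptedException {
        pb.inheritIO(); // assumption: output capture is out of scope for this sketch
        Process process = pb.start();
        int exit;
        try {
            exit = process.waitFor();
        } catch (InterruptedException e) {
            process.destroy(); // best effort: processes started by the script may survive
            throw e;
        }
        if (exit != successExitCode) {
            throw new IllegalStateException(
                    "Script exited with " + exit + ", expected " + successExitCode);
        }
    }
}
```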

Sample Configuration

Note on Windows

When running the job on Windows, users often encounter an error like the following:

Cannot run program "run.bat" (in directory "C:\test"): CreateProcess error=2, The system cannot find the file specified.

This normally means you need to run the command through the Windows command interpreter, using cmd /C.

To do this, instead of supplying the file to execute as the first value for Script with Arguments, supply the values "cmd" and "/C" as separate values, followed by any others you require, as illustrated below.

Script with Arguments: cmd /C run.bat

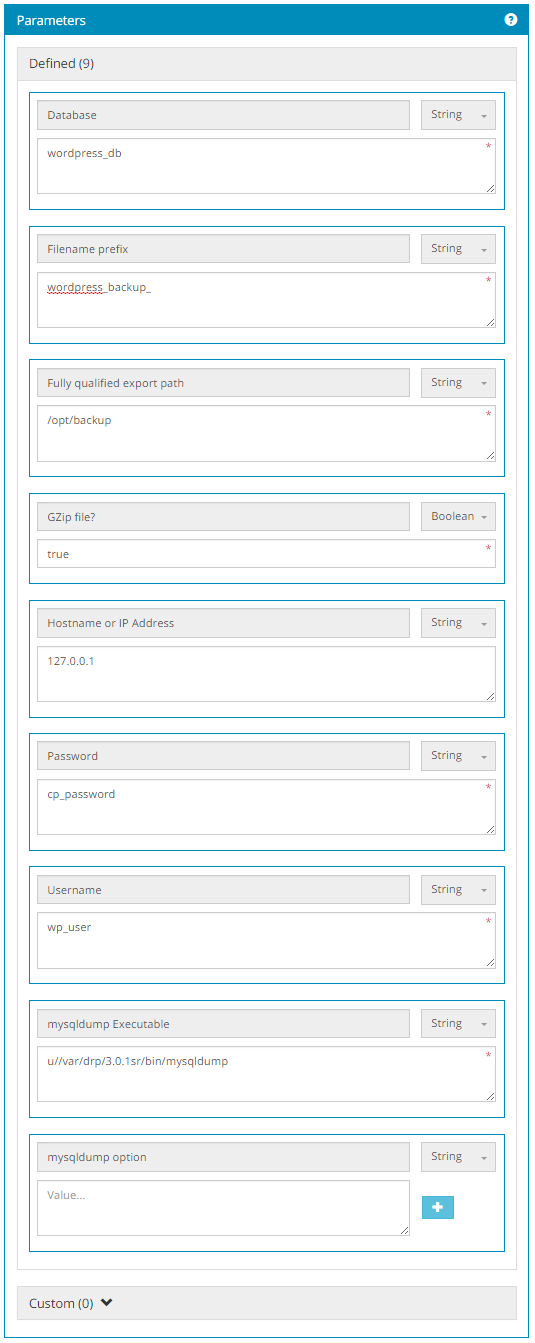
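In terms of `java.lang.ProcessBuilder`, the workaround above amounts to passing "cmd", "/C" and the batch file as three separate arguments. The class name below is hypothetical. The working directory reuses `C:\test` from the sample error.

```java
import java.io.File;

/** Hypothetical illustration of the cmd /C workaround as a ProcessBuilder call. */
final class WindowsScriptExample {
    static ProcessBuilder runBat() {
        // Script with Arguments: "cmd", "/C", "run.bat" as three separate values
        ProcessBuilder pb = new ProcessBuilder("cmd", "/C", "run.bat");
        pb.directory(new File("C:\\test")); // Working Directory
        return pb;
    }
}
```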

MySqlBackupJob

Introduced in version 2.4

This is a convenience job that uses the mysqldump utility to extract a backup and store it on the filesystem. A timestamp is appended to the specified filename prefix to protect against overwrites. The optional mysqldump options are not validated and will result in runtime failures if they are invalid. Likewise, specified paths are not verified; they only need to be available on the scheduler host at runtime. If the mysqldump executable is not found at runtime, set mysqldump Executable to its fully qualified path.

- Fully qualified export path (String) - The directory where you want the backups stored.

- Filename prefix (String) - The filename prefix to use for the backup files.

- Username (String) - The user for the mysqldump command.

- Password (String) - The user's password for the mysqldump command.

- Database (String) - The database to back up.

- Hostname or IP Address (optional String) - The host of the MySQL database. If not provided, assumes localhost.

- GZip file? (Boolean) - Determines whether the backup file is compressed using GZip.

- mysqldump option (0 or more Strings) - Any number of desired mysqldump options can be specified.

- mysqldump Executable (String) - Fully qualified path to mysqldump executable.
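To show how the options above fit together, this sketch builds a mysqldump command line and optionally GZips its output. The job's actual argument order, timestamp format and file suffixes are not documented here. `MySqlDumpSketch`, the `yyyyMMdd-HHmmss` format and the `.sql`/`.sql.gz` suffixes are hypothetical. `--host`, `--user`, `--password` and `--single-transaction` are standard mysqldump options. A password passed on the command line may be visible to other users in process listings.

```java
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.text.SimpleDateFormat;
import java.util.ArrayList;
import java.util.Date;
import java.util.List;
import java.util.zip.GZIPOutputStream;

/** Hypothetical sketch of the mysqldump invocation; not the job's actual command line. */
final class MySqlDumpSketch {

    /** Timestamped target file; the timestamp format and suffixes are assumptions. */
    static File targetFile(String exportDir, String prefix, Date now, boolean gzip) {
        String ts = new SimpleDateFormat("yyyyMMdd-HHmmss").format(now);
        return new File(exportDir, prefix + ts + (gzip ? ".sql.gz" : ".sql"));
    }

    static List<String> command(String executable, String host, String user,
                                String password, List<String> options, String database) {
        List<String> cmd = new ArrayList<>();
        cmd.add(executable);                                        // mysqldump Executable
        cmd.add("--host=" + (host != null ? host : "localhost"));   // defaults to localhost
        cmd.add("--user=" + user);
        cmd.add("--password=" + password);                          // visible in process listings
        cmd.addAll(options);                                        // passed through unvalidated
        cmd.add(database);
        return cmd;
    }

    /** Streams mysqldump's stdout into the target file, GZipped if requested. */
    static void dump(List<String> cmd, File target, boolean gzip)
            throws IOException, InterruptedException {
        Process p = new ProcessBuilder(cmd).redirectError(ProcessBuilder.Redirect.INHERIT).start();
        try (InputStream in = p.getInputStream();
             OutputStream out = gzip ? new GZIPOutputStream(new FileOutputStream(target))
                                     : new FileOutputStream(target)) {
            in.transferTo(out);
        }
        if (p.waitFor() != 0) {
            throw new IOException("mysqldump exited with a non-zero code");
        }
    }
}
```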

Sample Configuration

Shell Script Jobs

Deprecated as of Obsidian 2.3.1. Use Script Job instead.

These jobs are provided to give convenient access to shell scripting. Not supported on Windows-based platforms.

Shell Script Job

Deprecated as of Obsidian 2.3.1. Use Script Job instead.

Job Class: com.carfey.ops.job.script.ShellScriptJob

Introduced in version 2.0

This job enables you to specify the actual contents of a shell script and have it execute on the runtime host. At runtime, the script will be written to disk, marked as executable and then executed. The script is then cleaned up. The shell script provided should be completely self-sufficient with any desired interpreter directives included and no assumptions or expectations of environment variables.

Shell Script Execution Job

Deprecated as of Obsidian 2.3.1. Use Script Job instead.

Job Class: com.carfey.ops.job.script.ShellScriptExecutionJob

Introduced in version 2.0

This job enables you to specify an existing shell script to execute. Validation of the script's existence and executable state is done only at runtime. You must ensure that the script exists in an executable state on all Obsidian Hosts in the cluster or restrict the job to the necessary Fixed Hosts.

Miscellaneous Jobs

Database File Export Job

Job Class: com.carfey.ops.job.db.DatabaseFileExportJob

Introduced in Obsidian 5.1.0

This job produces a file export based on a database connection and a query.

The job supports the following configuration options:

- SQL Query (String) - The source query that produces the desired export results.

- JDBC Connection Provider (optional Class) - A class implementation of DatabaseConnectionProvider that provides a connection. This allows for use of an existing connection pool or other means of obtaining a connection. If not used, the URL/username/password configuration must be used for connections.

- JDBC DB URL (optional String) - JDBC URL. Relies on the JDBC driver class being available on the classpath. If not used, the JDBC Connection Provider must be used for connections.

- JDBC DB username (optional String) - JDBC database username. Relies on the JDBC driver class being available on the classpath. If not used, the JDBC Connection Provider must be used for connections.

- JDBC DB password (optional String) - JDBC database password. Relies on the JDBC driver class being available on the classpath. If not used, the JDBC Connection Provider must be used for connections.

- File type (String) - One of CSV, JSON, XML.

- File name pattern (String) - Filename patterns for the generated filename support the following tokens, delimited by < and >:

  - `<custom:paramname>` substitutes the value of another parameter defined on the job.
  - `<ext>` is the last file extension (e.g. 'txt').
  - `<ts:dateformat>` is a timestamp formatted with the supplied SimpleDateFormat pattern (e.g. `<ts:yyyy-MM-dd-HH-mm:ss>`).
  - Any other text, whether outside of < and > or inside < and > but not matching the preceding tokens, is treated as a literal.

- CSV Delimiter (optional String) - If CSV export is selected, can choose an alternative delimiter other than comma (,). Single character.

- CSV Quote Character (optional String) - If CSV export is selected, can choose an alternative quote character other than double quotes ("). Single character.

- CSV Escape Character (optional String) - If CSV export is selected, can choose an alternative escape character other than double quotes ("). Single character.

- CSV Line End String (optional String) - If CSV export is selected, can choose an alternative line end string other than newline (\n).

- Datetime format (String) - SimpleDateFormat format applied to java.sql.Timestamps.

- Date format (String) - SimpleDateFormat format applied to java.sql.Dates.
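The file name tokens and CSV options above can be illustrated with a small hypothetical sketch. `ExportSketch` is an illustrative name, not the job's code. It makes several assumptions. The caller supplies `<ext>` (for example, the lowercase File type). A missing custom parameter expands to an empty string. An unmatched token keeps its inner text without the angle brackets. The CSV part follows a common convention: every field is quoted, and embedded quote or escape characters are prefixed with the escape character. With the default `"` for both, this doubles embedded quotes.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical sketches of file-name token expansion and CSV row writing. */
final class ExportSketch {
    private static final Pattern TOKEN = Pattern.compile("<([^<>]*)>");

    /** Expands <custom:param>, <ext> and <ts:format>; other tokens keep their inner text. */
    static String fileName(String pattern, Map<String, String> params, String ext, Date now) {
        Matcher m = TOKEN.matcher(pattern);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String token = m.group(1);
            String value;
            if (token.startsWith("custom:")) {
                value = params.getOrDefault(token.substring("custom:".length()), ""); // assumption
            } else if (token.equals("ext")) {
                value = ext;
            } else if (token.startsWith("ts:")) {
                value = new SimpleDateFormat(token.substring("ts:".length())).format(now);
            } else {
                value = token; // assumption: unmatched tokens become literal text
            }
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }

    /** Writes one CSV row, quoting every (non-null) field and escaping quote/escape chars. */
    static String csvRow(String[] fields, char delimiter, char quote, char escape, String lineEnd) {
        StringBuilder row = new StringBuilder();
        for (int i = 0; i < fields.length; i++) {
            if (i > 0) {
                row.append(delimiter);
            }
            row.append(quote);
            for (char c : fields[i].toCharArray()) {
                if (c == quote || c == escape) {
                    row.append(escape);
                }
                row.append(c);
            }
            row.append(quote);
        }
        return row.append(lineEnd).toString();
    }
}
```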

REST Invocation Job

Job Class: com.carfey.ops.job.rest.RESTInvocationJob

Introduced in Obsidian 5.1.0

This job calls a REST endpoint using the designated method and payload, if applicable. A response code in the 2xx series is treated as success; all others are treated as failures. The payload and response are always expected to be Strings. Basic authentication is supported. The response is saved to job results to allow for use by chained jobs.

The job supports the following configuration options:

- URL Target (String) - Endpoint URL.

- REST API Method (String) - GET, PUT, POST, DELETE supported.

- Payload (optional String) - Required for PUT/POST, not permitted for GET/DELETE.

- Basic Authentication Username (optional String) - To use basic authentication, provide both a username and password.

- Basic Authentication Password (optional String) - To use basic authentication, provide both a username and password.

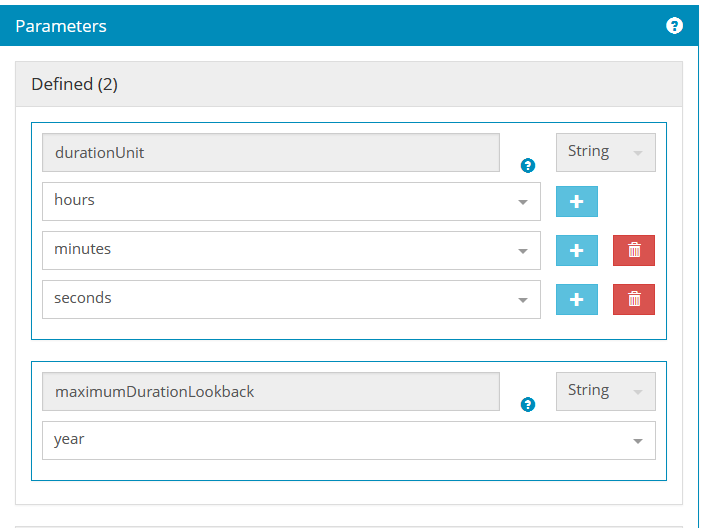
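The request rules described above can be sketched with the JDK's `java.net.http` client (Java 11+). This is an illustration, not the job's implementation, and `RestInvocationSketch` is a hypothetical name. The sketch enforces the payload rules, adds a standard `Authorization: Basic` header when credentials are given, and treats only 2xx responses as success. It returns the body so that a caller could save it as a result.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Hypothetical sketch of REST Invocation Job request handling; not Obsidian's code. */
final class RestInvocationSketch {

    static HttpRequest buildRequest(String url, String method, String payload,
                                    String username, String password) {
        boolean needsBody = method.equals("PUT") || method.equals("POST");
        if (!needsBody && !method.equals("GET") && !method.equals("DELETE")) {
            throw new IllegalArgumentException("Unsupported method: " + method);
        }
        if (needsBody && payload == null) {
            throw new IllegalArgumentException("Payload is required for PUT/POST");
        }
        if (!needsBody && payload != null) {
            throw new IllegalArgumentException("Payload is not permitted for GET/DELETE");
        }
        HttpRequest.Builder builder = HttpRequest.newBuilder(URI.create(url))
                .method(method, needsBody
                        ? HttpRequest.BodyPublishers.ofString(payload)
                        : HttpRequest.BodyPublishers.noBody());
        if (username != null && password != null) {
            String credentials = Base64.getEncoder().encodeToString(
                    (username + ":" + password).getBytes(StandardCharsets.UTF_8));
            builder.header("Authorization", "Basic " + credentials);
        }
        return builder.build();
    }

    /** Sends the request; any non-2xx status fails. Returns the body for chained jobs. */
    static String invoke(HttpClient client, HttpRequest request)
            throws IOException, InterruptedException {
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        int status = response.statusCode();
        if (status < 200 || status > 299) {
            throw new IllegalStateException("HTTP " + status + ": " + response.body());
        }
        return response.body();
    }
}
```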

Obsidian Execution Statistics Job

Job Class: com.carfey.ops.job.schedule.ObsidianExecutionStatisticsJob

Introduced in Obsidian 6.4.0

This job runs against the job activity stored in Obsidian to collect execution statistics. These statistics are then available via the UI, the REST API and the Embedded API. Job statistics use the data stored in job activity (history) and will not include any history removed by the Job History Cleanup Job. You should therefore align the maximumDurationLookback configuration value with the amount of history you retain. For example, if you keep 6 months of job history, a year lookback provides no benefit, because its statistics would match the six month statistics.

The job supports the following configuration options:

- durationUnit (String) - What statistics unit to calculate. Valid values: seconds, minutes, hours. Defaults to minutes. Multiple allowed.

- maximumDurationLookback (String) - How far back to collect statistics. One of day, two day, three day, five day, week, month, two month, three month, six month, year. Defaults to three month. Large durations will calculate and store stats for smaller durations; e.g. two day includes day.
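The rule that a larger lookback also produces statistics for every smaller one can be modelled as an ordered enum. This is a hypothetical illustration, not Obsidian's code. It only assumes that the documented values are ordered from shortest to longest, as listed above, and that they are written with single spaces (e.g. "two day").

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/** Hypothetical model of maximumDurationLookback values; not Obsidian's code. */
final class LookbackSketch {
    // Declared shortest to longest, in the order the values are documented.
    enum Lookback {
        DAY, TWO_DAY, THREE_DAY, FIVE_DAY, WEEK, MONTH, TWO_MONTH, THREE_MONTH, SIX_MONTH, YEAR;

        /** Maps a configured value such as "two day" to TWO_DAY. */
        static Lookback fromConfig(String value) {
            return valueOf(value.trim().toUpperCase(Locale.ROOT).replace(' ', '_'));
        }
    }

    /** Every lookback window that the configured maximum also calculates and stores. */
    static List<Lookback> windowsFor(String maximumDurationLookback) {
        Lookback max = Lookback.fromConfig(maximumDurationLookback);
        List<Lookback> windows = new ArrayList<>();
        for (Lookback l : Lookback.values()) {
            if (l.compareTo(max) <= 0) {
                windows.add(l);
            }
        }
        return windows;
    }
}
```

Under this model, the default of "three month" covers eight windows, from day through three month.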